ABSTRACT

This paper describes the Ubiq-Genie framework for integrating external frameworks with the Ubiq social VR platform. The proposed architecture is modular, allowing for easy integration of services and providing mechanisms to offload computationally intensive processes to a server. To showcase the capabilities of the framework, we present two prototype applications: 1) a voice- and gesture-controlled texture generation method based on Stable Diffusion 2.0 and 2) an embodied conversational agent based on ChatGPT. This work aims to demonstrate the potential of integrating external frameworks into social VR for the creation of new types of collaborative experiences.

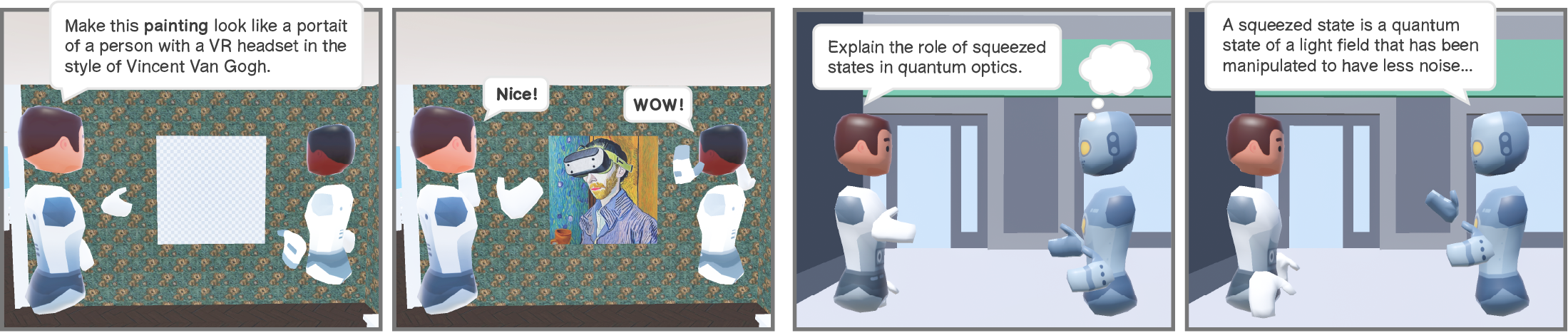

Overview of the two collaborative prototype applications presented in this paper, both built on Ubiq-Genie. Left: a voice-controlled texture generation method based on the diffusion-based image synthesis model Stable Diffusion 2.0. Right: a voice-controlled conversational agent based on the text synthesis tool ChatGPT.

CITING

@inproceedings{numanUbiqGenieLeveragingExternal2023,
  title = {Ubiq-{{Genie}}: {{Leveraging External Frameworks}} for {{Enhanced Social VR Experiences}}},
  booktitle = {2023 {{IEEE Conference}} on {{Virtual Reality}} and {{3D User Interfaces Abstracts}} and {{Workshops}} ({{VRW}})},
  author = {Numan, Nels and Giunchi, Daniele and Congdon, Benjamin and Steed, Anthony},
  year = {2023},
  pages = {497--501},
  location = {{Shanghai, China}},
  doi = {10.1109/VRW58643.2023.00108},
}