ABSTRACT

Virtual Reality (VR) has revolutionized how we interact with digital worlds. However, programming for VR remains a complex and challenging task, requiring specialized skills and knowledge. Powered by large language models (LLMs), DreamCodeVR is designed to assist users, irrespective of their coding skills, in crafting basic object behavior in VR environments by translating spoken language into code within an active application. This approach seeks to simplify the process of defining behaviors and visual changes through speech. Our preliminary user study indicated that the system’s speech interface supports elementary programming tasks, highlighting its potential to improve accessibility for users with varying technical skills. However, it also uncovered a wide range of challenges and opportunities. In an extensive discussion, we detail the system’s strengths, weaknesses, and areas for future research.

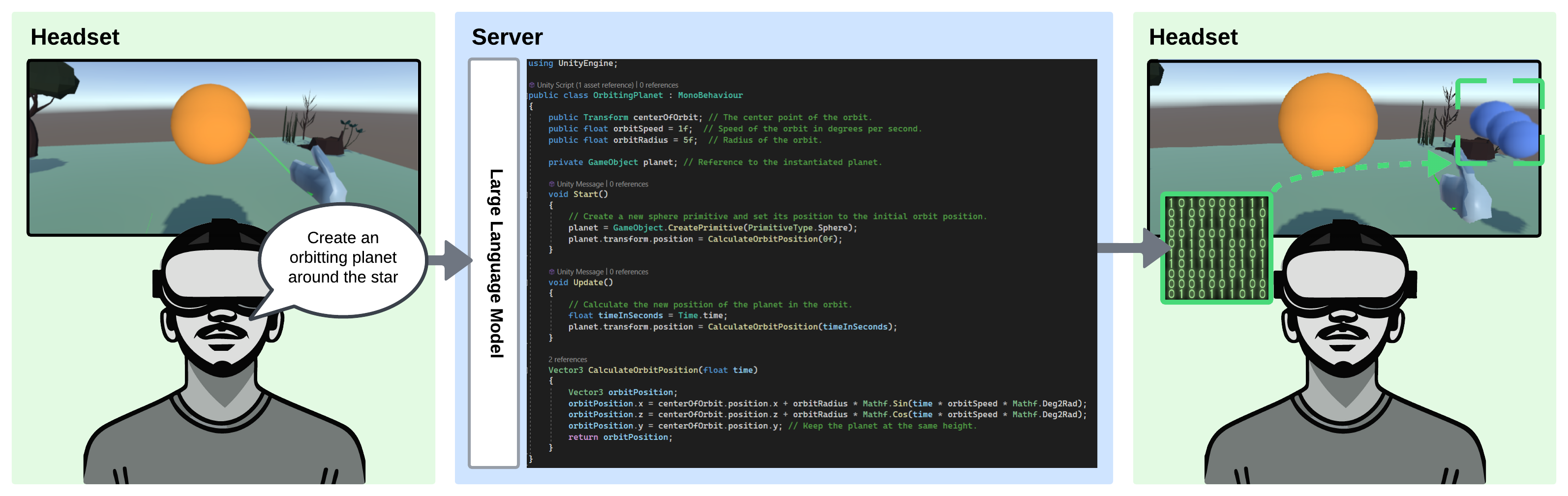

Conceptual overview of DreamCodeVR: an AI-code-based behavior generator, triggered by the user's speech, that can change the appearance, behavior, and state of a running Unity VR application.

CITING

    @inproceedings{giunchiDreamCodeVRDemocratizingBehavior2024,
      title = {{{DreamCodeVR}}: {{Towards Democratizing Behavior Design}} in {{Virtual Reality}} with {{Speech-Driven Programming}}},
      booktitle = {2024 {{IEEE Conference Virtual Reality}} and {{3D User Interfaces}} ({{VR}})},
      author = {Giunchi, Daniele and Numan, Nels and Gatti, Elia and Steed, Anthony},
      publisher = {IEEE},
      address = {Orlando, USA},
      year = {2024},
      doi = {10.1109/VR58804.2024.00078}
    }